Dask presentation and tutorials

Guillaume Eynard-Bontemps and Emmanuelle Sarrazin, CNES (Centre National d’Etudes Spatiales - French Space Agency)

2026

Dask

What is Dask for?

- Problem: Python is powerful and user-friendly, but it doesn’t scale well

- Solution: Dask makes it possible to scale Python natively

What is Dask?

Python library for parallel and distributed computing

- Scales NumPy, Pandas and Scikit-Learn

- General purpose computing/parallelization framework

Why use Dask?

- Process data that is larger than the memory available on a single machine

- Parallel execution for faster processing

- Distribute computation for large datasets

How to use Dask?

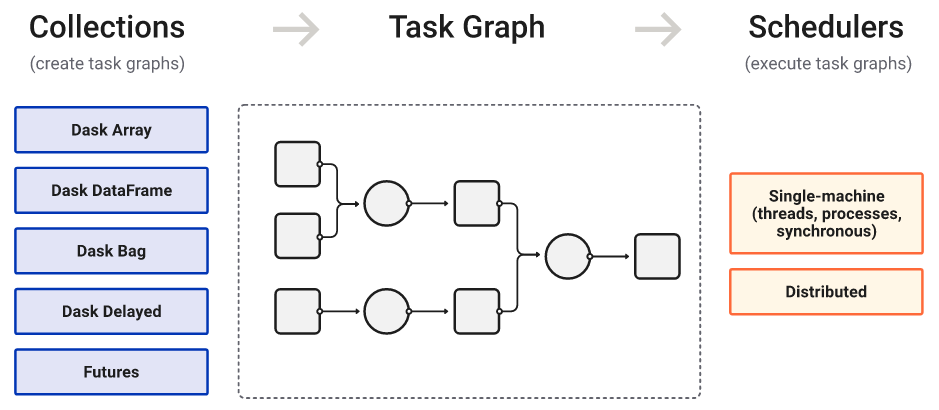

Dask provides several APIs

- Dataframes

- Arrays

- Bags

- Delayed

- Futures

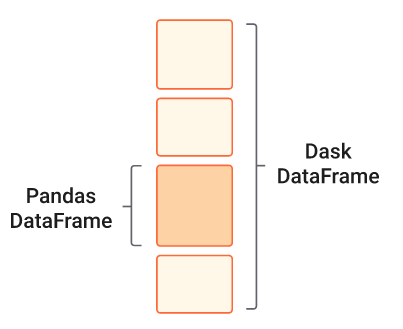

Dataframes

- Extend the Pandas library

- Parallelize Pandas DataFrame operations

- Similar to Apache Spark

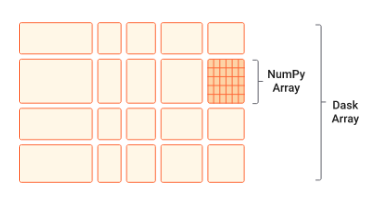

Arrays

Bags

- Process Python lists in parallel; commonly used for text or raw Python objects

- Offer map and reduce functionalities

- Similar to Spark RDDs or vanilla Python data structures and iterators
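
The map and reduce style of Bags can be sketched with a small word count. The input lines are invented for illustration, and the threaded scheduler is requested explicitly so that the example does not depend on multiprocessing.

```python
import dask.bag as db

# Hypothetical input: a few lines of text held in a Python list.
lines = ["dask scales python", "python is friendly", "dask is lazy"]
bag = db.from_sequence(lines, npartitions=2)

# map: split every line into words, then flatten the lists of words.
words = bag.map(str.split).flatten()

# reduce: count how often each word occurs.
counts = dict(words.frequencies().compute(scheduler="threads"))

# Another reduction: total number of characters over all words.
total_chars = words.map(len).sum().compute(scheduler="threads")

print(counts)
print(total_chars)
```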

Delayed

- Build custom pipelines and workflows

- Parallelize arbitrary for-loop style Python code

- Parallelize and distribute tasks

- Lazy task scheduling

- Similar to Airflow
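
A minimal sketch of the Delayed API applied to for-loop style code follows. The function `inc` is a hypothetical stand-in for any expensive Python function.

```python
from dask import delayed


@delayed
def inc(x):
    # Stand-in for an expensive computation (hypothetical example function).
    return x + 1


# Ordinary for-loop: each call only records a task, nothing runs yet.
partials = []
for i in range(4):
    partials.append(inc(i))

# Combine the lazy results with one more lazy task.
total = delayed(sum)(partials)

# compute() executes the whole graph; the inc tasks can run in parallel.
result = total.compute()
print(result)
```

Because the four `inc` calls do not depend on each other, the scheduler is free to run them concurrently before the final `sum`.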

Futures

```python
from dask.distributed import LocalCluster

# Start a local cluster and connect a client to it
client = LocalCluster().get_client()

# `filenames`, `load` and `process` stand for your own inputs and functions

# Submit work to happen in parallel
results = []
for filename in filenames:
    data = client.submit(load, filename)
    result = client.submit(process, data)
    results.append(result)

# Gather results back to local computer
results = client.gather(results)
```

- Extend Python’s concurrent.futures interface for real-time task submission

- Scale generic Python workflows across a Dask cluster with minimal code changes

- Immediate task scheduling

Two levels of API

High-level

- Parallel versions of popular libraries

- Scale NumPy, Pandas

- Similar to Spark

Low-level

- Distributed real-time scheduling

- Scale custom workflows

- Similar to Airflow

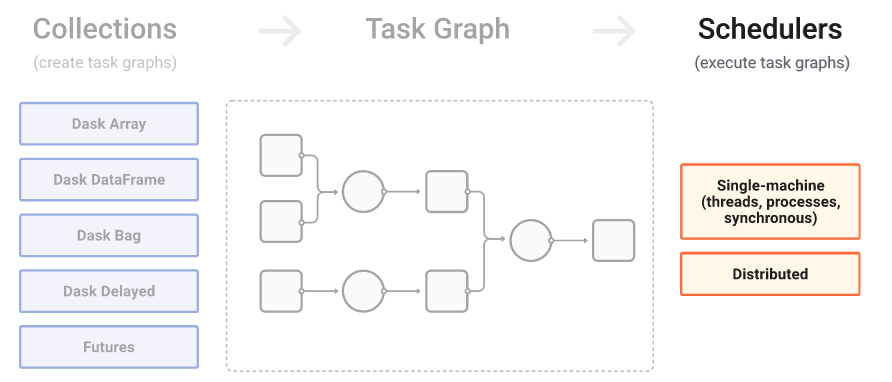

How does Dask work?

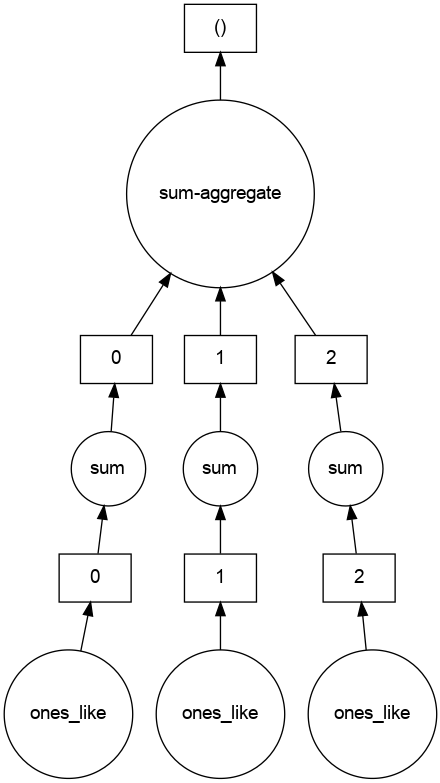

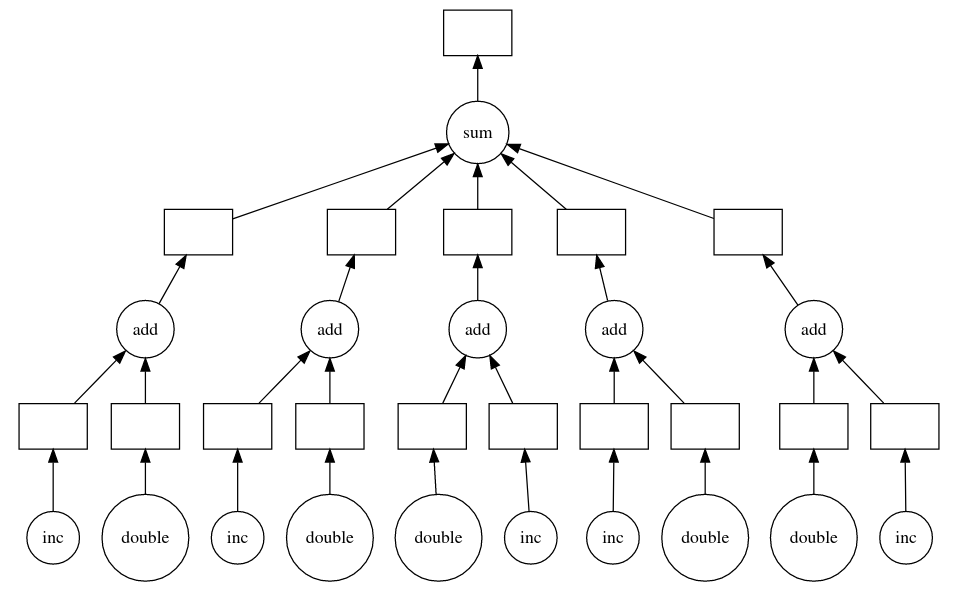

First, produce a task graph

High-level collections are used to generate task graphs

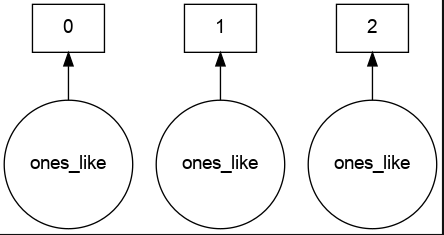

Create an array of ones
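
As a rough sketch of what such a graph contains, the snippet below creates a chunked array of ones and lists the tasks behind it. The length 15 and the chunk size 5 are assumptions chosen for illustration; the exact task names vary between Dask versions.

```python
import dask.array as da

# One-dimensional array of 15 ones, stored as 3 chunks of 5 elements each
# (shape and chunk size are assumed values).
x = da.ones(15, chunks=5)

print(x.chunks)  # ((5, 5, 5),)

# The output keys: one per chunk of the array.
keys = x.__dask_keys__()

# The task graph is a mapping from keys to tasks; nothing has been computed yet.
graph = dict(x.__dask_graph__())
for key in graph:
    print(key)
```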

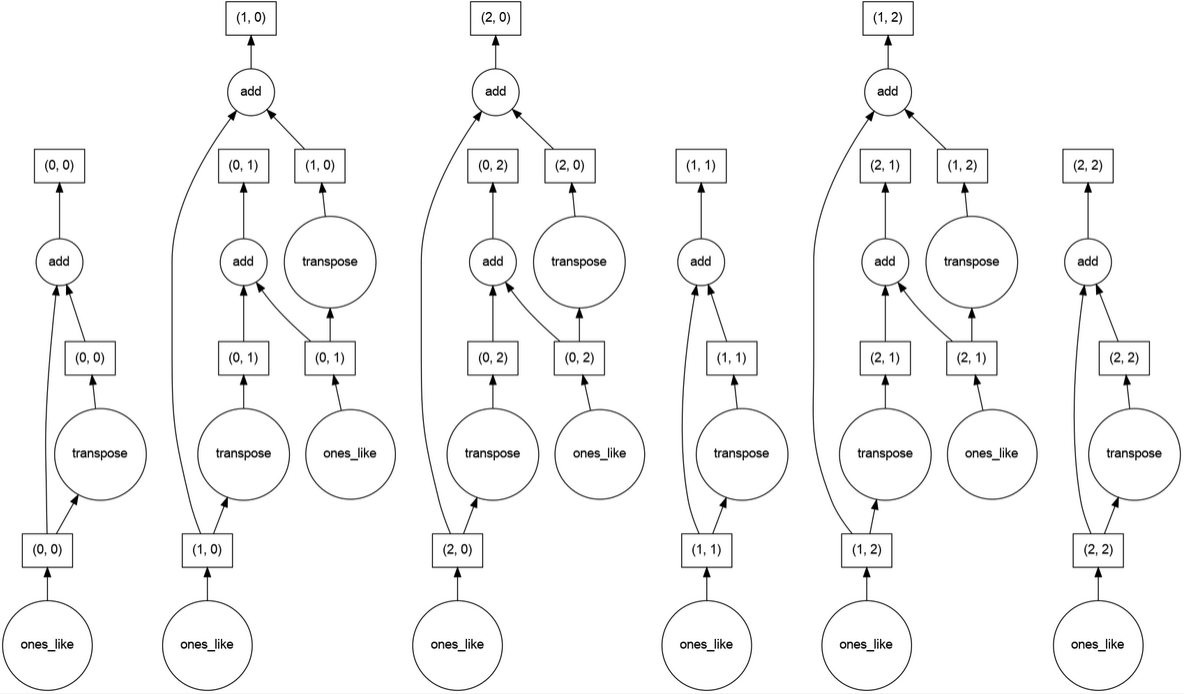

Create a 2D array of ones and sum it
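
A minimal sketch of this case, assuming a 15x15 array split into 5x5 chunks (the same sizes as in the matrix multiplication example of this deck):

```python
import dask.array as da

# 15x15 array of ones, split into a 3x3 grid of 5x5 chunks (assumed sizes).
x = da.ones((15, 15), chunks=(5, 5))

# Lazy reduction: each chunk is summed first, then the partial sums are combined.
total = x.sum()

value = total.compute()
print(value)  # 225.0
```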

Add array to its transpose

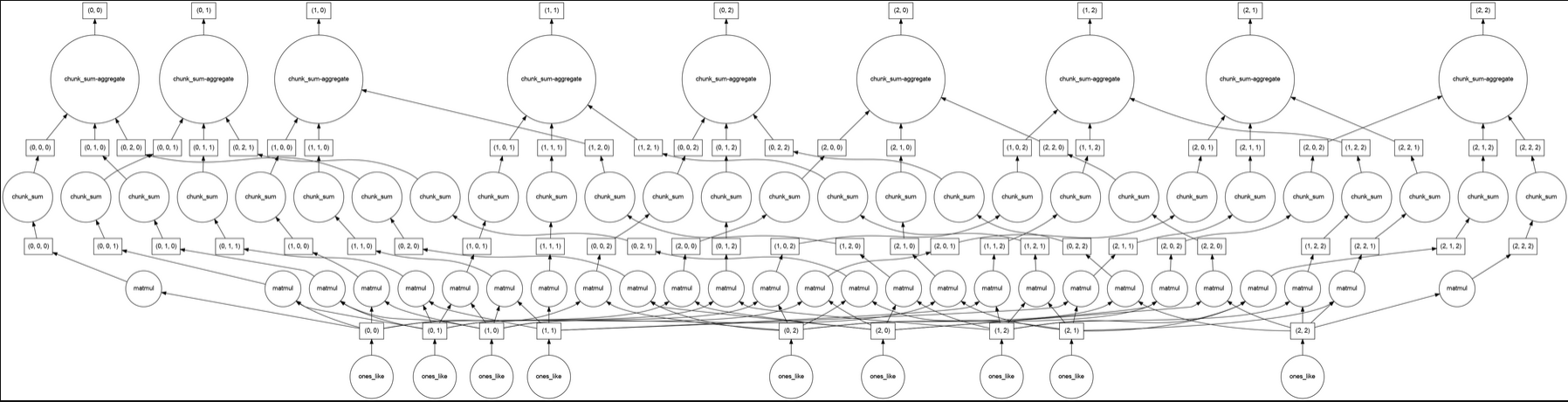
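
Adding an array to its transpose can be sketched as follows. The 4x4 input with 2x2 chunks is an assumption chosen so that the result is easy to check by hand.

```python
import numpy as np
import dask.array as da

# 4x4 array holding 0..15, split into 2x2 chunks (assumed sizes).
x = da.from_array(np.arange(16).reshape(4, 4), chunks=(2, 2))

# Lazy: each output chunk depends on one chunk of x and one chunk of x.T.
y = x + x.T

result = y.compute()
print(result)
```

The result is symmetric, since entry (i, j) is the sum of x[i, j] and x[j, i].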

Matrix multiplication

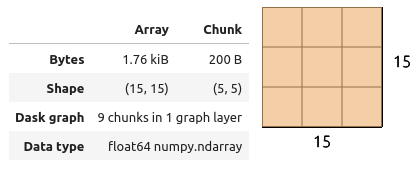

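```python
import dask.array as da

# Two 15x15 arrays of ones, each split into a 3x3 grid of 5x5 chunks
x = da.ones((15, 15), chunks=(5, 5))
y = da.ones((15, 15), chunks=(5, 5))

# Lazy matrix product: builds the task graph, computes nothing yet
r = da.matmul(x, y)
```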

Dask graph

- Every operation or task submitted to Dask is turned into a graph

- Dask is lazily evaluated

- The real computation is performed by executing the graph
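
Lazy evaluation can be made visible with a function that records when it is actually called. This is only an illustrative sketch: `record` and the `calls` list are hypothetical helpers, and the synchronous scheduler is used to keep the run deterministic.

```python
from dask import delayed

calls = []


def record(x):
    # Hypothetical helper: remember that we were called, then do some work.
    calls.append(x)
    return x * 2


# Building the task does not call record().
lazy = delayed(record)(21)
calls_before = list(calls)

# Executing the graph is what triggers the real computation.
value = lazy.compute(scheduler="synchronous")

print(calls_before, calls, value)
```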

Then, compute the calculation

Use compute() to execute the graph and get the result

compute() method

- Computes the result of a Dask collection (the result of a Future object is retrieved with result())

- The method blocks until the computation is complete and returns the result.

persist() method

- Starts the computation of a Dask collection and keeps the results in the workers’ memory, returning a new collection backed by those results.

- This can be useful for large datasets that are used multiple times in a computation, as it avoids recomputing the same data multiple times.
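
The difference between the two methods can be sketched on a small array. The example assumes the default local scheduler, in which case persisted chunks are kept in the memory of the local process rather than on cluster workers.

```python
import dask.array as da

x = da.ones((15, 15), chunks=(5, 5))

# Lazy intermediate result that will be reused twice.
y = (x + 1) * 2

# persist(): run the graph now, keep the chunks in memory, still a Dask array.
y_mem = y.persist()

# compute(): block until done and return concrete values.
# Both reductions start from the persisted chunks instead of recomputing y.
total = y_mem.sum().compute()
mean = y_mem.mean().compute()

print(total, mean)
```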

How to deploy?

Dask execution

- Task graphs can be executed by schedulers on a single machine or a cluster

- Dask offers several backend execution systems and is resilient to failures

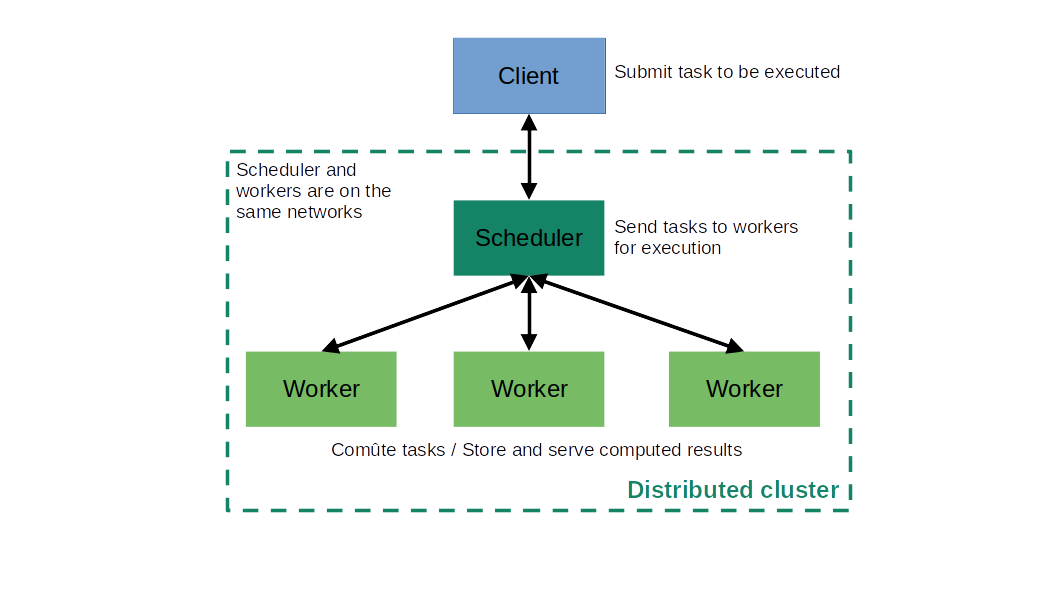

- Client: interacts with the Dask cluster, submits tasks

- Scheduler: is in charge of executing the Dask graph, sends tasks to the workers

- Workers: compute tasks as directed by the scheduler, store and serve computed results to other workers or clients

Local execution

- Deploy a Dask cluster on a single machine

- Configure it to use threads or multiprocessing
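
On a single machine, the scheduler can also be selected per call without starting a cluster. The sketch below uses Dask's built-in single-machine schedulers; `square` is a hypothetical example function. Passing `scheduler="processes"` instead would select the multiprocessing scheduler.

```python
import dask
from dask import delayed


@delayed
def square(x):
    # Hypothetical example task.
    return x * x


total = delayed(sum)([square(i) for i in range(5)])

# Thread pool in the current process.
threaded = total.compute(scheduler="threads")

# Single-threaded execution, convenient for debugging.
sequential = total.compute(scheduler="synchronous")

# The choice can also be set for a whole block of code.
with dask.config.set(scheduler="threads"):
    configured = total.compute()

print(threaded, sequential, configured)
```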

Distributed execution

- Deploy a Dask cluster on distributed hardware

- Dask can work with:

  - popular HPC job submission systems like SLURM, PBS, SGE, LSF, Torque, Condor

  - Kubernetes

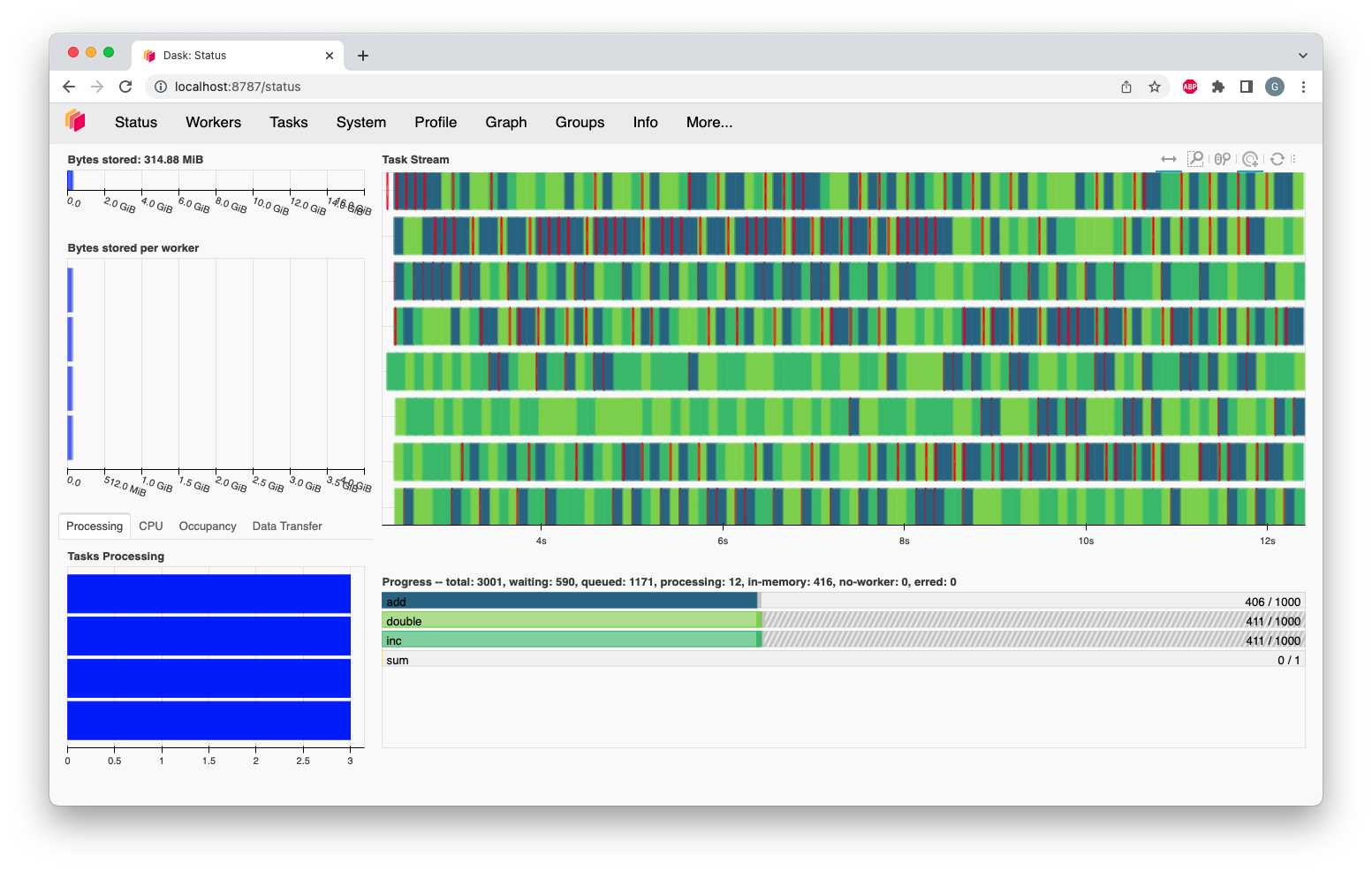

Use the Dashboard

- Helps you understand the state of your workers

- Follow worker memory consumption

- Follow CPU utilization

- Follow data transfer between workers

Dask and machine learning

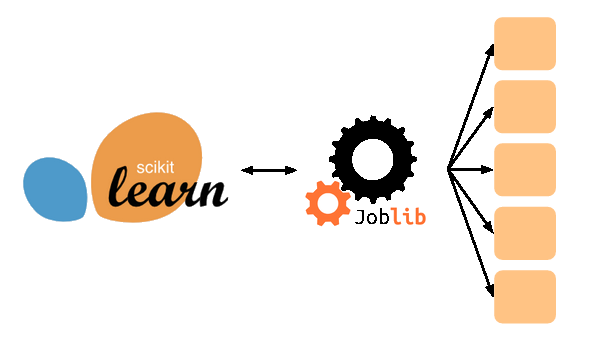

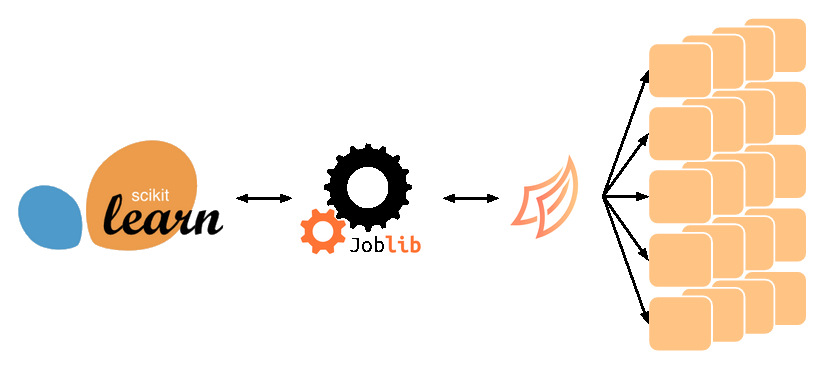

Scikit-learn/Joblib

Scikit-learn/Joblib/Dask

Dask-ML

- Provides scalable machine learning alongside popular machine learning libraries

- Works with:

  - Scikit-Learn

  - XGBoost

  - PyTorch

  - Tensorflow/Keras

How to install?

via pip

Extra packages

- Use Dask on queuing systems like PBS, Slurm, MOAB, SGE, LSF, and HTCondor.

- Use Dask on Kubernetes

- Use Dask with machine learning frameworks

Try Dask

Dask Tutorial

Follow these in order of importance:

- Dask Dataframes

- Distributed

- Delayed

- Parallel and Distributed Machine Learning

Next, if you have more time:

- Array

- Futures

Pangeo tutorial or finish deploying your computing platform

or

Finish yesterday’s deployment (needed for tomorrow).